Results 1 - 10 of 317 for standards (0.12 sec)

docs/de/docs/features.md

# Features { #features } ## FastAPI Features { #fastapi-features } **FastAPI** gives you the following: ### Based on open standards { #based-on-open-standards }

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Sat Oct 11 17:48:49 UTC 2025 - 10.9K bytes - Viewed (0) -

docs/de/docs/tutorial/schema-extra-example.md

Previously, it only supported the keyword `example` with a single example. That keyword is still supported by OpenAPI 3.1.0, but it is <abbr title="obsolete: it should no longer be used">deprecated</abbr> and not part of the JSON Schema standard. We therefore recommend migrating from `example` to `examples`. 🤓

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Wed Dec 24 10:28:19 UTC 2025 - 10.6K bytes - Viewed (0) -

docs/de/docs/tutorial/path-params.md

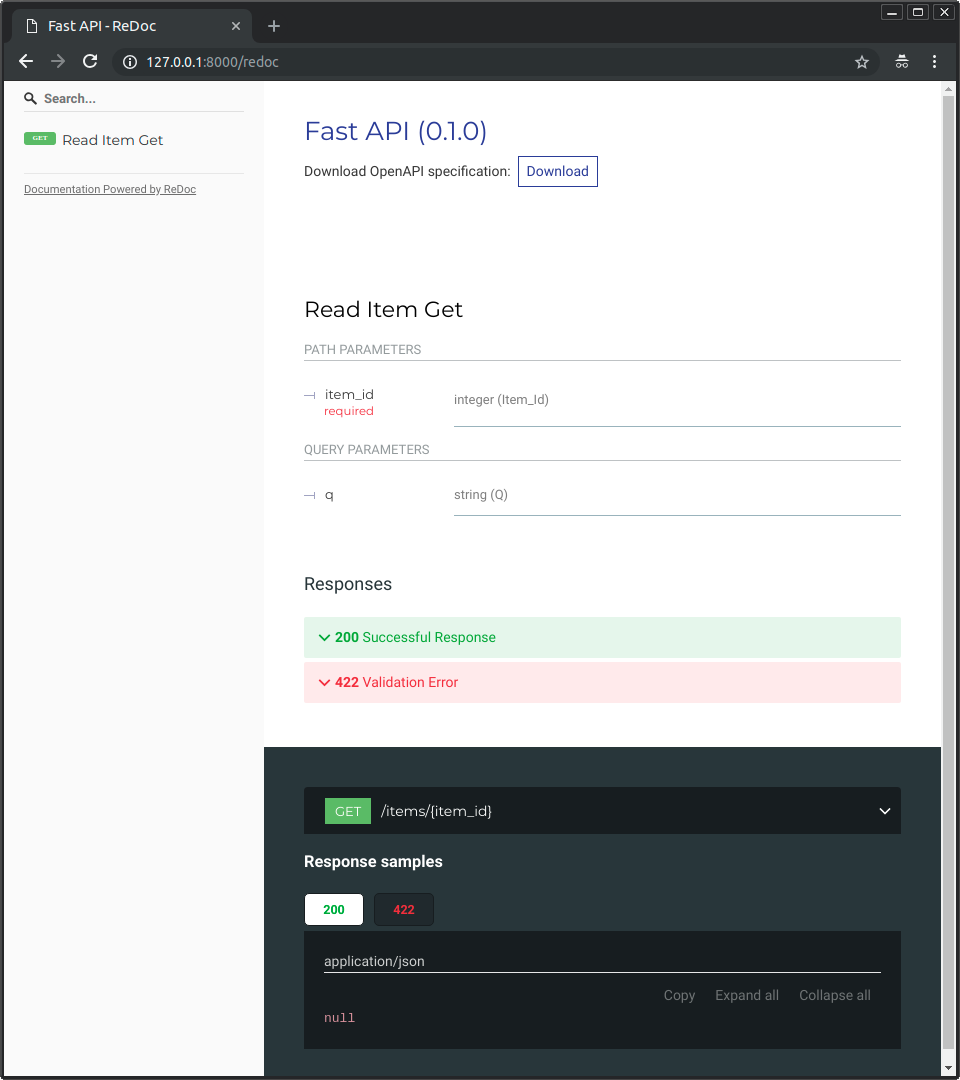

Note that the path parameter is declared as an integer there. /// ## Standards-based benefits, alternative documentation { #standards-based-benefits-alternative-documentation } And because the generated schema comes from the <a href="https://github.com/OAI/OpenAPI-Specification/blob/master/versions/3.1.0.md" class="external-link" target="_blank">OpenAPI</a> standard, there are many compatible tools.

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Wed Dec 17 20:41:43 UTC 2025 - 10.5K bytes - Viewed (0) -

README.md

3. **Follow** the coding standards: `mvn formatter:format` 4. **Add** comprehensive tests for new functionality 5. **Commit** your changes: `git commit -m 'Add amazing feature'` 6. **Push** to the branch: `git push origin feature/amazing-feature` 7. **Submit** a Pull Request with detailed description ### Code Standards - Follow the project's Eclipse formatter configuration

Registered: Sat Dec 20 08:55:33 UTC 2025 - Last Modified: Sun Aug 31 02:56:02 UTC 2025 - 12.7K bytes - Viewed (0) -

docs/fr/docs/features.md

Everything is based on standard **Python 3.8** type declarations (thanks to Pydantic). No new syntax to learn. Just standard, modern Python. If you would like a 2-minute refresher on how to use types in Python (even if you do not plan to use FastAPI), take a look at the following tutorial: [Python Types](python-types.md){.internal-link target=_blank}. You write standard Python with type annotations: ```Python

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Sat Oct 11 17:48:49 UTC 2025 - 11.1K bytes - Viewed (0) -

docs/de/docs/tutorial/security/oauth2-jwt.md

## Recap { #recap } With what you have seen so far, you can set up a secure **FastAPI** application using standards like OAuth2 and JWT. In almost any framework, handling security quickly becomes a rather complex subject.

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Wed Oct 01 15:19:54 UTC 2025 - 12.7K bytes - Viewed (0) -

docs/en/docs/index.md

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Thu Dec 25 11:01:37 UTC 2025 - 23.5K bytes - Viewed (0) -

docs/en/docs/tutorial/security/oauth2-jwt.md

And you can use and implement secure, standard protocols, like OAuth2 in a relatively simple way.

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Mon Sep 29 02:57:38 UTC 2025 - 10.6K bytes - Viewed (0) -

docs/de/docs/tutorial/first-steps.md

### OpenAPI { #openapi } **FastAPI** generates a "schema" with all your APIs using the **OpenAPI** standard for defining APIs. #### "Schema" { #schema } A "schema" is a definition or description of something. Not the actual code that implements it, but just an abstract description.

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Wed Dec 17 20:41:43 UTC 2025 - 14.3K bytes - Viewed (0) -

docs/de/docs/index.md

Registered: Sun Dec 28 07:19:09 UTC 2025 - Last Modified: Fri Dec 26 09:39:53 UTC 2025 - 25.8K bytes - Viewed (1)